Unless hindered in some way, AI capabilities will keep increasing. If some quantity X keeps growing faster than some quantity Y, and that lead in growth rate does not fade away, X will eventually be much larger than Y. Human capabilities grow far more slowly than AI capabilities, so eventually AI capabilities will be much larger than human capabilities. This is a logical necessity, independent of how capable you think AI is at this moment.
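The growth argument can be made precise under one simplifying assumption, which is ours and not a claim about actual growth curves: suppose AI capability $X$ and human capability $Y$ grow exponentially at rates $a > b$, starting from positive values $X_0$ and $Y_0$. Then the ratio between them grows without bound:

$$
\frac{X(t)}{Y(t)} = \frac{X_0\, e^{a t}}{Y_0\, e^{b t}} = \frac{X_0}{Y_0}\, e^{(a-b)t}
\quad\Longrightarrow\quad
\frac{X(t)}{Y(t)} > k \quad \text{for all } t > \frac{\ln\!\left(k\, Y_0 / X_0\right)}{a-b}
$$

For any factor $k$, however large, $X$ eventually exceeds $Y$ by that factor. A head start for humans (a large $Y_0 / X_0$) only delays the crossing; it does not prevent it.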

All the talk about whether AI can really think, or about how well it currently performs on this or that test, is essentially a distraction. At most, these considerations affect the time frame.

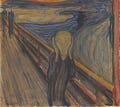

Another logical necessity is that something much more capable than us could harm us. To convince yourself of this, try to imagine an entity that is 10,000x as intelligent and capable as all of humanity.

There are many arguments for why a superintelligent AI may not want to harm us. But there is no escaping the fact that it is a risk: something much more powerful than us has the potential to harm us. Also, once a new superintelligent being exists, it is hard to predict exactly how it will behave or what will motivate it. We may think that we will decide its motivation and behavior, and set up unbreakable rules for it. But this is something we have never tried before, and something we cannot even imagine in a meaningful way. The chance that there is something we haven’t thought of in this future scenario is extremely high, and the chance that this overlooked factor leads to bad outcomes is at least not zero. It’s also possible that we only get one chance. If we do not get alignment right the first time, the superintelligent AI may kill all humans, and there will be no second chance.

Another argument that people put forward against AI risk is that AI is simply a tool without agency of any kind. However, what happens inside modern AIs is a black box. We cannot understand what goes on inside them in the way we can understand a traditional program by reading its code; what we know is the output they give. If we look at evolution, biological creatures have evolved to have agency, because agency turned out to be an effective way for them to accomplish their evolutionary goals of surviving and reproducing. If this happened in the evolution of carbon-based beings, it could also happen in the evolution of silicon-based beings. Just as developing agency helped biological creatures achieve their goals, developing agency could help an AI achieve its goals.

Another way to view the issue is that many knowledgeable people think there is a risk of AI catastrophe. See this petition. If that is the case, then there is a risk even if you don’t think so, because when smart people disagree with you, there is a risk that you are wrong. And a risk of a risk is a risk.
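The “risk of a risk” step is an application of the law of total probability. As an illustration, with labels of our own choosing, let $C$ be the event of an AI catastrophe and $E$ the event that the concerned experts are right, so that $P(C \mid E) > 0$:

$$
P(C) = P(C \mid E)\, P(E) + P(C \mid \neg E)\, P(\neg E) \;\ge\; P(C \mid E)\, P(E) > 0
$$

As long as you give the experts any nonzero chance $P(E)$ of being right, the overall risk $P(C)$ is nonzero, even if you personally believe $P(C \mid \neg E) = 0$.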

From here, a common argument is that it is a risk we should take: the risk of human extinction is small, and the potential gains from AI are very large, so we should go ahead. An issue with this argument is that there are fates worse than death. One possibility is that AI could cause suffering through indifference. Human minds could be trapped in some sort of stasis, neither living nor dying. A year in such a state would be horrible. Now try to imagine existing this way for a hundred million years.

What should we do then? I posit that the answer is quite obvious: at some point we must try to halt AI advancement. The risk of having an intelligence vastly more capable than us is too large.